DB Krupp, Queen’s University, Ontario

Our lives are plagued by controversy. We argue about immigration, gun control and gay rights. We argue about climate change, economic inequality and vaccines. We even argue about the geometry of the Earth.

To make sense of this, pundits often appeal to beliefs — lumping people together as “liberal” or “conservative” blocs, say, or as “religious” or “secular” factions. In naming these beliefs, we are given the impression that something has been explained, even when it hasn’t. Labels don’t tell us why we choose sides, and they don’t tell us how to bring those sides closer together.

But we can understand our differences once we recognize that they’re the product of independent minds with independent agendas. Think of each of us as possessing not a compass, but a subconscious calculator that tallies the personal costs and benefits of a decision, and adjusts our moral intuitions accordingly. We then construct a belief that fits our intuitions, and craft an argument to justify this belief.

Equity or equality?

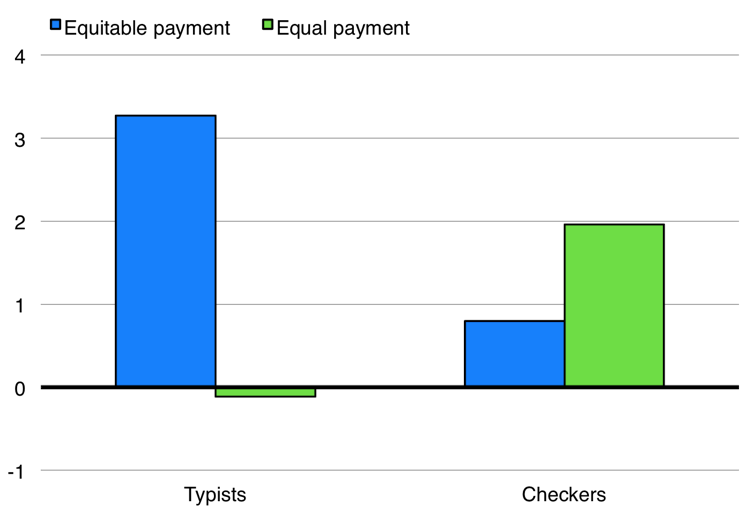

An elegant demonstration of moral accounting concerns the competing principles of equity, in which people who contribute more are rewarded more, and equality, in which everyone is rewarded the same.

In an experiment, Peter DeScioli and colleagues randomly assigned participants either to be “Typists,” who transcribed three paragraphs, or to be “Checkers,” who transcribed one of those three paragraphs to check it for accuracy. Participants were then asked how fair it would be to pay them equitably, so that Typists earned three times as much as Checkers, or equally, so that Typists and Checkers earned the same amount. Typists believed that equity was the fairer arrangement, whereas Checkers believed that equality was. Thus, personal profit and beliefs about fairness coincided neatly.

Modified from DeScioli et al. (2014). Equity or equality? Moral judgments follow the money. Proceedings of the Royal Society B: Biological Sciences, 281, 20142112.

But the distance between Typists and Checkers shrank when the experimenters gave both groups the same amount of work. Once the Typists could no longer justify earning more money, they agreed with the Checkers that equal pay was the fairer option. This implies that moral thinking isn’t merely self-interested — it’s strategic.

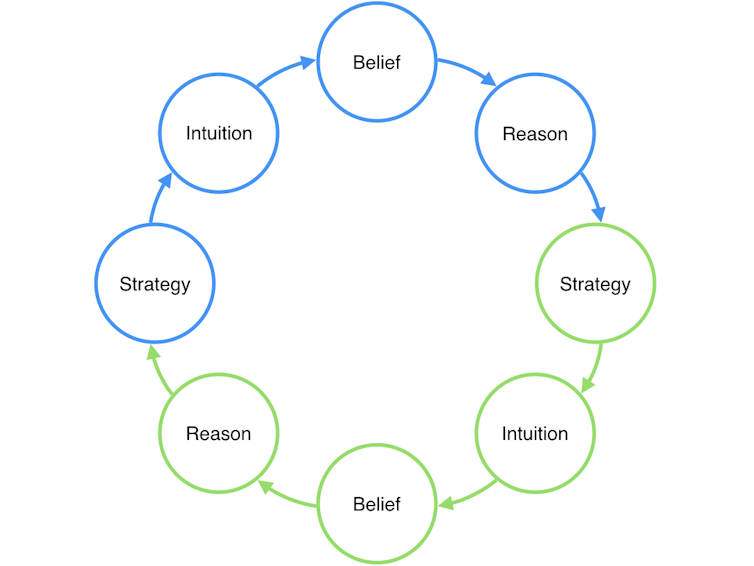

Under most circumstances, you aren’t the only person to hold sway over a decision, and so you must rally others to your side. Other people have their own goals, however, and may need some convincing. This implies that your sense of right and wrong may be a compromise between personal benefit and social persuasion: Your beliefs are a link in a mental chain designed to marshal support in a dispute.

A strategic model of moral psychology. One person (in blue) has a strategy that informs her intuition. This, in turn, informs her beliefs. She then uses reason to justify her beliefs to someone else (in green), in an attempt to bring his beliefs closer to her own. However, he will also be influenced by his own strategy that informs his intuitions, and therefore his beliefs and reasoning.

When worlds collide

While our incentives are not always transparent, research has uncovered the cost-benefit logic of many beliefs. For example, cues of life expectancy inform the calculus of reproductive “scheduling”: Women are predicted to have children earlier when life is short, and to wait when life is long. I have found this reflected in birth and abortion rates: Teen birth rates are highest in Canadian provinces with shorter life expectancies, and abortion rates are highest in provinces with longer life expectancies.

We also know that people are more troubled by stories of incest between a hypothetical pair of siblings if they themselves grew up with an opposite-sex sibling. That people feel a crime is more serious and upsetting if the victim was a close relative. That men are more willing to kill each other if they face high income inequality. And that American and Japanese participants alike behave like “collectivists” or like “individualists” if they are given a clear understanding of the consequences of their actions.

Unfortunately, the beliefs that straddle moral fault lines are largely impervious to empirical critique. We simply embrace the evidence that supports our cause and deny the evidence that doesn’t. If strategic thinking motivates belief, and belief motivates reason, then we may be wasting our time trying to persuade the opposition to change their minds.

Instead, we should strive to change the costs and benefits that provoke discord in the first place. Many disagreements are the result of worlds colliding — people with different backgrounds making different assessments of the same situation. By closing the gap between their experiences and by lowering the stakes, we can bring them closer to consensus. This may mean reducing inequality, improving access to health care or increasing contact between unfamiliar groups.

We have little reason to see ourselves as unbiased sources of moral righteousness, but we probably will anyway. The least we can do is minimize that bias a bit.

DB Krupp, Adjunct Professor of Psychology, Queen’s University, Ontario

This article is republished from The Conversation under a Creative Commons license.